The power of cohorts

You're probably familiar with A/B testing: showing different users different options and measuring which they prefer.

The Mention Me platform is built around the ability to A/B test by cohort. But to understand how it works, you first need to understand why referral needs cohort-based testing.

Today you'll learn about the power of A/B testing by cohort, what makes our testing platform tick and why it's one of our top engineering challenges.

What are cohorts?

(Other than a surprisingly difficult word to say with a French accent!)

A cohort is simply a group of things.

In our case, it's a group of people who've had or are going to have the same experience. We group them together so we can measure their properties separately. Then we can compare them to other groups.

Sounds pretty simple, right? In reality, it's a powerful concept which allows us to do rigorous A/B testing within referral campaigns.

But why is grouping people into cohorts even necessary? The answer’s simple: it's all about creating a consistent experience.

A traditional A/B test will change the experience for a group of users, for example by changing the colours on a page or the imagery used.

Changing these elements regularly and showing different variations to a user is relatively harmless. But in referral, consistency is really important.

Imagine I offer you £20 to introduce a friend to a brand. There are two things that are really important to you:

- You want to be confident that if you share with a friend tomorrow (or next week), you'll still get your £20.

- If you promise a friend £20 for following your referral link, it's very important they have a positive experience and receive £20 too.

It would be an awful experience for you and your friend if you each only got £5.

Another important factor is that you might not be ready to share today. Maybe you don't know who to share your offer with just yet. It might be months before you share with a friend.

This is why our platform has A/B testing by cohort as a cornerstone.
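One way to picture that consistency requirement is a ledger that "locks in" the first offer each person sees. The post doesn't describe how the platform stores this, so the sketch below assumes a simple in-memory mapping; `Offer`, `OfferLedger` and their fields are hypothetical names, and a real system would persist the mapping rather than hold it in memory.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Offer:
    """A referral offer: what the referrer and the referred friend each receive."""
    offer_id: str
    referrer_reward_gbp: int
    friend_reward_gbp: int


@dataclass
class OfferLedger:
    """Remembers the first offer each person saw, so later lookups return
    that same offer even if the live offer has changed in the meantime."""
    locked: dict[str, Offer] = field(default_factory=dict)

    def offer_for(self, person_id: str, live_offer: Offer) -> Offer:
        # setdefault stores live_offer only on this person's first visit;
        # every later call returns whatever was stored then.
        return self.locked.setdefault(person_id, live_offer)

    def friend_reward_for(self, referrer_id: str) -> int:
        # A friend arriving via a referral link gets what their referrer promised.
        return self.locked[referrer_id].friend_reward_gbp


ledger = OfferLedger()
launch = Offer("launch-20", referrer_reward_gbp=20, friend_reward_gbp=20)
cheaper = Offer("cheaper-5", referrer_reward_gbp=5, friend_reward_gbp=5)

print(ledger.offer_for("alice", launch).referrer_reward_gbp)   # 20
# Months later the live offer changes, but Alice keeps her £20...
print(ledger.offer_for("alice", cheaper).referrer_reward_gbp)  # 20
# ...and so does the friend she refers.
print(ledger.friend_reward_for("alice"))                       # 20
# A newcomer sees the current offer.
print(ledger.offer_for("bob", cheaper).referrer_reward_gbp)    # 5
```

The key design choice is that the offer is decided once, at first exposure, and never recomputed from whatever happens to be live later.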

And you're now an expert in understanding the value of cohort testing! Congratulations 🎉

Let's look next at how an experiment runs.

Running an experiment

Our clients can experiment with many options. They can change variables like the reward, the design or the copy.

The reward itself can come in many forms, like percentage discounts, Amazon vouchers or even free gifts.

When a client has decided what they'd like to test, they'll set up an experiment in our platform and choose the group of people who should see it: VIPs, for instance, or all new customers.

Our platform will dynamically split this group in half. These are our two cohorts - our A and our B.
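The post doesn't say how the split is made. A common technique, shown here purely as an assumption, is to hash the experiment and person identifiers together, which gives a stable, roughly even split without storing a random draw. `assign_cohort`, `experiment_id` and `person_id` are hypothetical names.

```python
import hashlib


def assign_cohort(experiment_id: str, person_id: str) -> str:
    """Deterministically place a person in cohort "A" or "B" for one experiment."""
    # Hash the experiment and person together, so one person can land in
    # different cohorts in different experiments but always the same one here.
    key = f"{experiment_id}:{person_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Treat the first 8 bytes as an integer; its parity gives a roughly 50/50 split.
    bucket = int.from_bytes(digest[:8], "big") % 2
    return "A" if bucket == 0 else "B"


# The same person gets the same cohort on every visit.
assert assign_cohort("exp-42", "alice") == assign_cohort("exp-42", "alice")

# Across many people the split comes out close to half and half.
share_in_a = sum(assign_cohort("exp-42", f"user-{i}") == "A" for i in range(10_000)) / 10_000
print(f"{share_in_a:.3f} of people in A")
```

Hashing keeps assignment consistent while an experiment is running, but it is not enough on its own: once an experiment ends, the offer a person already saw still has to be honoured.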

We'll show each person either A or B and then see what happens next. We hope that each person who sees an offer will tell their friends.

But we can't look at the results immediately. As mentioned above, it may be weeks or months before everyone who might share has done so.

We must ensure we've shown enough people the offers - and given them time to share - before deciding if A or B is better. We may also want to wait until their friends have purchased too.
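As a rough illustration of "wait, then decide", here is a fixed-horizon check built on a standard two-proportion z-test. This is a textbook test, not necessarily what our platform runs; the "conversion" could be a share or a friend's purchase, and the thresholds (`planned_n_per_cohort`, `min_days`) and all numbers are hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: cohorts A and B convert at the same rate."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # No variation at all (e.g. zero conversions in both cohorts): no evidence either way.
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


def evaluate(conv_a: int, n_a: int, conv_b: int, n_b: int,
             planned_n_per_cohort: int, days_observed: int, min_days: int,
             alpha: float = 0.05) -> str:
    # Fixed-horizon rule: only look at the result once BOTH cohorts have reached
    # the sample size chosen before launch AND people have had time to share.
    # Checking repeatedly and stopping at the first "significant" result
    # inflates the false-positive rate.
    if min(n_a, n_b) < planned_n_per_cohort or days_observed < min_days:
        return "keep waiting"
    z, p_value = two_proportion_z_test(conv_a, n_a, conv_b, n_b)
    if p_value >= alpha:
        return "no significant difference"
    return "B wins" if z > 0 else "A wins"


# Hypothetical numbers: 10,000 people per cohort, 5.0% vs 5.8% conversion.
print(evaluate(500, 10_000, 580, 10_000, planned_n_per_cohort=10_000, days_observed=90, min_days=60))  # B wins
print(evaluate(500, 10_000, 580, 10_000, planned_n_per_cohort=10_000, days_observed=14, min_days=60))  # keep waiting
```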

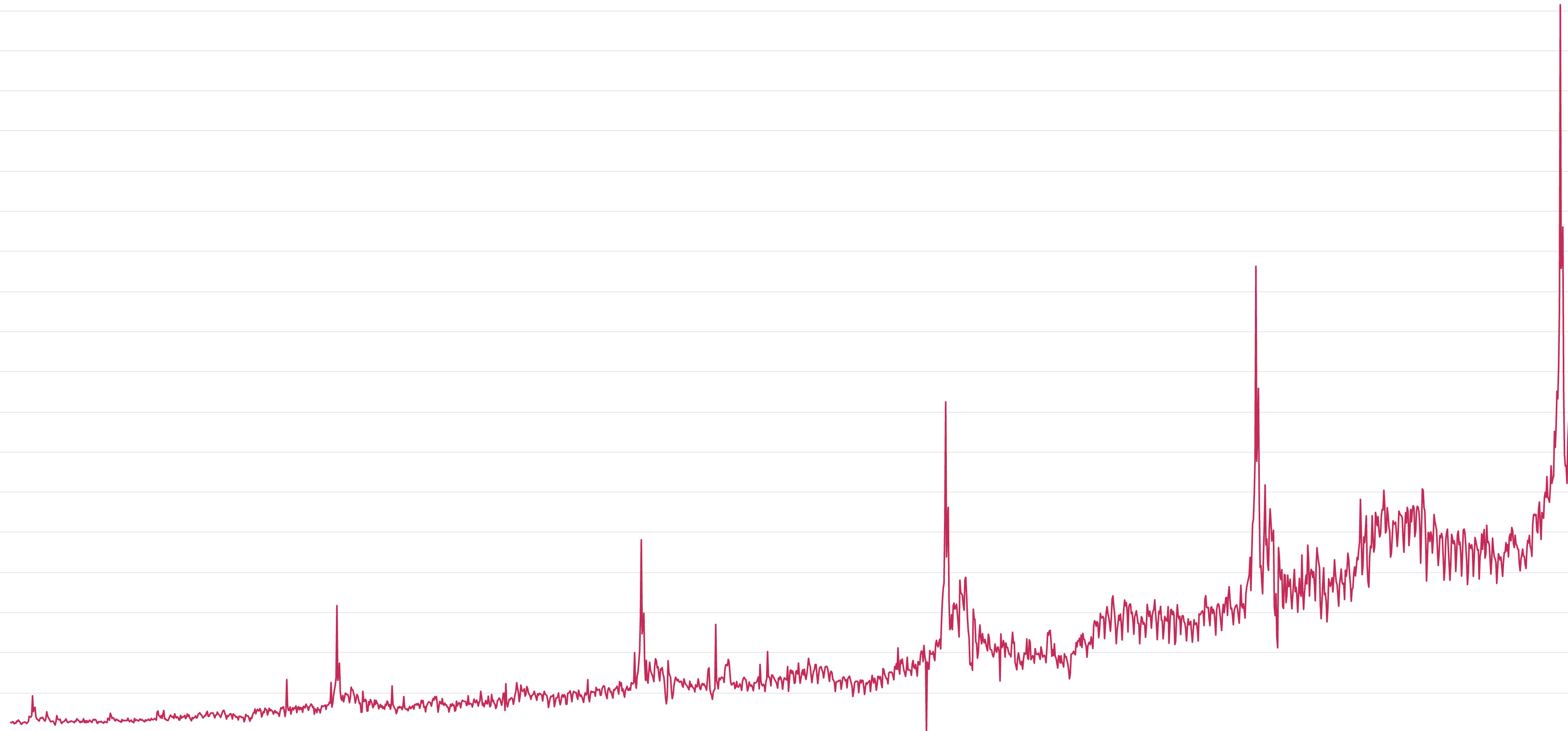

For example, A might encourage more people to share immediately. This looks good - we might have a very high share rate to begin with.

But perhaps B takes longer and encourages a more 'considered' referral. Perhaps B leads to more high-value purchases too. B might ultimately be the better choice, even though A looks better at the beginning.

Because of this, we have to ensure our platform is based on robust and fair statistical analysis.

Robust analysis needs enough people in each cohort, so high-volume clients can experiment much faster, while very low-volume clients will struggle to test as quickly. This is why the majority of our clients are established companies and we don't cater for the long tail of smaller companies.
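To see why volume matters so much, here is the standard textbook sample-size approximation for comparing two rates, followed by how long each cohort takes to fill. The baseline share rate, the lift and the weekly traffic figures are made up for illustration, and this ignores the extra waiting time for late sharers, which only makes low-volume tests slower still.

```python
import math
from statistics import NormalDist


def sample_size_per_cohort(baseline: float, relative_lift: float,
                           alpha: float = 0.05, power: float = 0.8) -> int:
    """People needed in EACH cohort to detect a relative lift in a rate
    (e.g. share rate) with a two-sided two-proportion z-test."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def weeks_to_run(n_per_cohort: int, eligible_people_per_week: int) -> float:
    # The eligible audience is split in half, so each cohort fills at half the rate.
    return n_per_cohort / (eligible_people_per_week / 2)


# Hypothetical: 5% baseline share rate, looking for a 10% relative lift (5% -> 5.5%).
n = sample_size_per_cohort(baseline=0.05, relative_lift=0.10)
print(n)  # roughly 31,000 people per cohort
print(f"{weeks_to_run(n, 100_000):.1f} weeks at 100k eligible people/week")
print(f"{weeks_to_run(n, 2_000):.1f} weeks at 2k eligible people/week")
```

The same test that finishes in under a week for a large client takes the best part of a year for a small one.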

We've glossed over an important part of our platform here. Our reporting platform processes millions of events and converts them into gorgeous funnels for our clients to learn from. It is worthy of a blog post (or two) in itself. Watch this space for future articles 👀

In the meantime, if you're interested in the statistical challenges of A/B testing, there are many great articles available. Try this one: Peeking problem – the fatal mistake in A/B testing and experimentation - GoPractice!

What's the challenge?

What we've described so far doesn't sound too difficult. Put a group of people in A and a group in B - wait - and then measure the results.

There are two important parts that make this difficult:

- Doing it at scale

- Time

The first challenge is scale: we have over 450 clients. Each client runs numerous A/B tests at any one time, with potentially hundreds of thousands of people in each cohort.

Each variant has its own copy, language, design and reward, so it's very easy to end up with a combinatorial explosion of variables to manage.

And that's before we've even counted up the billions of referral events we have.
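To see how quickly the combinations grow, here is a toy count for a single client using made-up option lists. Each extra option multiplies the number of combinations rather than adding to it.

```python
import math
from itertools import product

# Made-up option lists for one client's referral offer.
options = {
    "copy": ["friendly", "urgent", "premium"],
    "language": ["en", "fr", "de", "es"],
    "design": ["banner", "modal"],
    "reward": ["£20 voucher", "15% off", "free gift"],
}

# Every combination of one choice from each list.
combinations = list(product(*options.values()))
print(len(combinations))  # 72

# The count is the product of the list sizes: 3 * 4 * 2 * 3.
assert len(combinations) == math.prod(len(v) for v in options.values())
```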

The second challenge is time. As time goes on, we have to keep careful track of which offers are still valid and which are no longer relevant. We can't 'just change it'. Adding a new feature (or removing one) needs careful consideration.

We've only scratched the surface here. There are some further great product questions we try to answer in our platform:

- What happens when an offer ends? Can I still refer my friends?

- What happens if a client wants to end an offer early? How do you move 100k people to a new offer - given consistency is key?

- Can you be part of multiple cohorts? If so, which reward do you get?

- When A wins, can we automate ending the A/B test and switching to A? How should that affect people in B?

Questions like this are why we frequently ask, "Referral, how hard can it be?"

If you think you have a good answer - we'd love to chat. Tell us your ideas in the contact form below.

Tim Boughton
